Splice & Studio One Integration – AI-based music workflows that I have talked about since 2022


Another product idea of mine has become reality. This time it’s by Splice. Over the past few years, in every talk that I have given online, I have mentioned the way creatives are thinking about AI & Machine Learning, especially those who are sceptical about it. An example I’d give is about Apple’s Logic Pro. I’d usually start with some food for thought: “What if the loops panel on the right in Logic Pro is showing you results based on the context of the music you’re making? What if AI is already involved? Would your selecting the first loop in that list be considered ‘your choice’, or did AI nudge you to it?”

Edit (June 2024): I think “context based recommendation” is something the Logic Pro team is possibly already working on. It’s the most obvious feature to add as part of their Apple Intelligence branding that claims to “know what you’re doing”. Just in case they aren’t, I hope they read this article and consider it.

I’d then explain how ML has been in use in audio plugins for years, and the 'interaction' element is what makes creatives more comfortable in 'feeling' that they are making the creative decision. Machines have always assisted in some way, at least in creating the 'coincidence of finding a sound'. Technology is responsible for making new genres as much as the artists. There would be no Daft Punk if not for the synthesizer arps. Have fun listening to this track as you read the rest of the article. 🎶

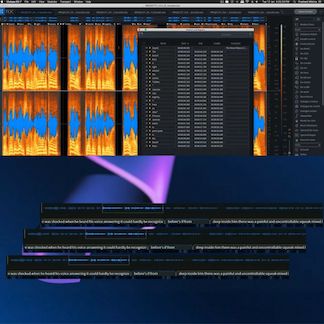

Looks like Splice liked the idea 😃 Their new integration with PreSonus Studio One’s side panel overlaps exactly with what I’ve been talking & writing about. But then I also say in my talks, "somebody somewhere is already working on the idea you just had", so it wouldn't be fair to say my posts were the only (if at all) source of inspiration. 😉

While you still have to manually click to use Splice’s “Search with Sound” feature, it wouldn’t have been a surprise if this part had been automated for you, so that the search results in the Splice side panel just show what works with the track you’re making.

In that case the marketing would have been “we have a great recommendation engine”, but it would have made users uncomfortable about how Splice & Studio One “know” the kind of music they are making. Maybe Image-Line's FL Studio can do this with their FL Cloud?

Even now the data might go to Splice, but the mere requirement of a manual click by the user makes them 'feel' that they are in control. As I have said many times before:

"When it comes to AI workflows in audio & music, UX & form factor is everything."

In 2022 I presented the Voice & Text sync tool that I made for the marker track in RX (at The Audio Programmer's YouTube), and a year later iZotope brought it out as a feature (wrote about it on LinkedIn here). And now Splice’s side panel in Studio One seems very much related to my other talks since 2022.

📌 I’ve posted many more feature ideas online & given more talks since then, and I’m going to wait to see more products showing up. Maybe within the next year. 😀

Do you have an idea that you want to see become a reality? Collaborate with a developer and make it happen. Audio Developer Conference (ADC) has a free online version this year on 1st November 2024 as ADCxGather. The main event, ADC24, is from 11-13 November 2024 with an amazing lineup of talks, workshops & keynotes!

Share this article with anyone who would find this interesting. If you'd like to work with me on a new product idea, I'm just a Contact Me form away. 👋

Image Credits: Some of the pictures used in this article are taken from PreSonus' website.

Written by Prashant Mishra

Audio Developer Conference (ADC) | Game Audio India | National Institute of Design | Music Hack Day India | Music Tech Community | Previously contributed to School of Video Game Audio