AI, Creative Tools, Artists & the need for collaborations

Update (Nov, 2025):

I've been actively advocating for collaboration between creatives, artists and audio product creators. To facilitate this, I contribute to not-for-profit initiatives such as the Audio Developer Conference (ADC24 is happening this November, btw) to ensure that the dialogue between creators, researchers, students and users happens.

This has become even more important in the context of artificial intelligence (AI). An analogy I usually give is about synthesizers contributing to Daft Punk’s music. While synths are essentially electronic devices with signals flowing, it was the decision by synth makers to expose certain parameters that allowed artists to interact with them and create arpeggios, melodies and sequences that became part of their styles. As I've said in many of my talks:

Technology of an era has as much role to play in defining new sounds & genres as the artists. UI enables this.

Elements that now seem obvious, such as “exposing the LFO as a tweakable parameter”, “adding buttons for sequencing in drum machines” or “patch cables in modular systems”, are not just coincidences. Out of the many aspects of a circuit, someone chose to expose these. They are UI elements, and tech-savvy artists found ways to create something timeless with them.
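To make "exposing the LFO as a tweakable parameter" concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular synth is built: `LfoParams`, `render_tone` and the default values are hypothetical names and numbers chosen for this example. The oscillator maths stays hidden inside the function, and the artist only ever touches two knobs.

```python
import math
from dataclasses import dataclass


@dataclass
class LfoParams:
    """The two knobs a hypothetical synth panel exposes to the artist."""
    rate_hz: float = 5.0   # how fast the pitch wobbles
    depth_hz: float = 0.0  # how far the pitch wobbles; 0 switches the LFO off


def render_tone(params, base_hz=220.0, seconds=0.5, sample_rate=8000):
    """Render a sine tone whose frequency is modulated by the LFO."""
    samples = []
    phase = 0.0
    for n in range(int(seconds * sample_rate)):
        t = n / sample_rate
        # The LFO is just a slow sine wave added to the oscillator frequency.
        freq = base_hz + params.depth_hz * math.sin(2 * math.pi * params.rate_hz * t)
        phase += 2 * math.pi * freq / sample_rate
        samples.append(math.sin(phase))
    return samples


plain = render_tone(LfoParams())                            # steady tone
vibrato = render_tone(LfoParams(rate_hz=6.0, depth_hz=8.0)) # same tone, with vibrato
```

The signal path is identical in both calls. What changes is only what the artist is allowed to turn, which is the design decision the synth makers made.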

Which of these do you think makes it easier for a creative to express herself?

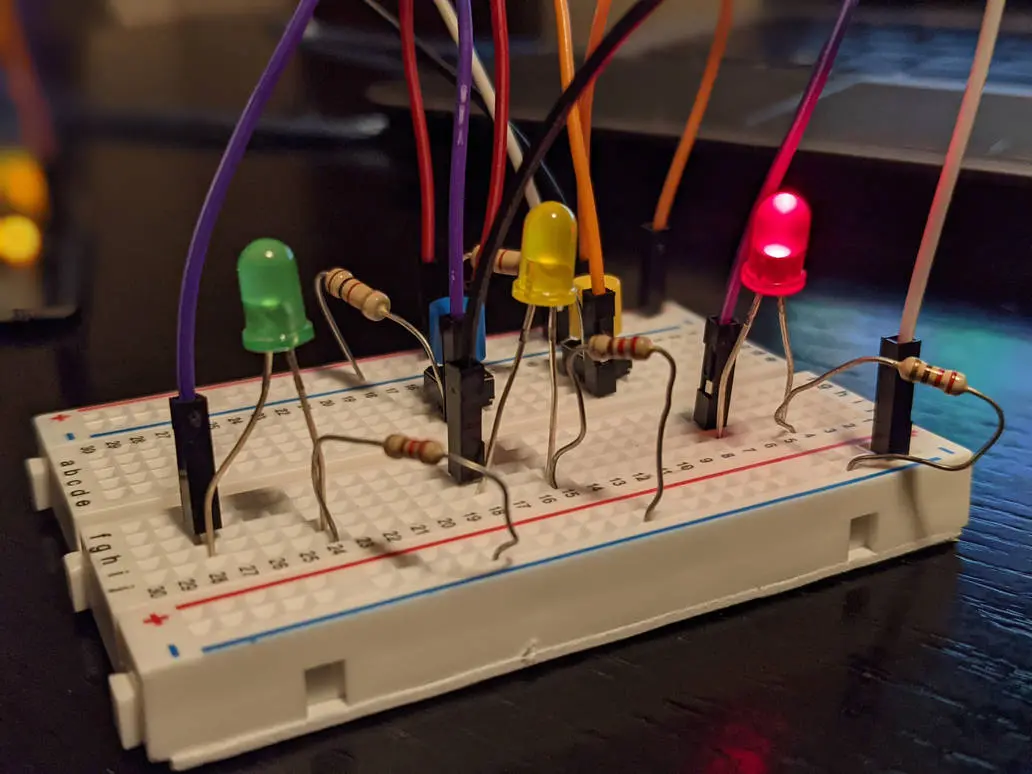

This:

or this:

While breadboards are fun too, it's clear that a musician would prefer the latter any day. Hold on to this thought as you read further.

Collaborations so far:

It would be naive to assume that news such as "Timbaland Becomes Strategic Advisor for AI Music Company Suno" or "How Jacob Collier helped shape the new MusicFX DJ" is a new phenomenon in the audio industry. A lot has happened over the past few decades, particularly in the software world, that I think every audio & music tech enthusiast today must know about.

Hans Zimmer x u-he

This is the best example (and my favorite one!). Urs Heckmann, founder of u-he, has been a pioneer in making audio products, including many open source projects such as CLAP & dawProject. He is a musician himself, and it shows in how u-he products have helped many artists. Zebra, u-he's flagship plugin, got extra modules built at Hans Zimmer’s request, and that version of the synth was eventually made available to the public as ZebraHZ. Remember Christopher Nolan's famous The Dark Knight? Its music is all ZebraHZ. You can see Hans using it in his MasterClass series as well, and also in this BBC Studios video at 00:58:

Here's a track I made when I first got my hands on this awesome plugin. You can clearly hear the "The Dark Knight" vibe in it because I used the presets handcrafted by HZ that came with the plugin. There's the Batman arp, Bat flap sounds and more.

Linkin Park x Open Labs Stagelight:

Around 2013, when many other audio tech companies came into existence (including Soundly, Soundtrap, Splice and Blend.io, among others), Open Labs released their DAW, Stagelight (Linkin Park Edition). They had also created MusicOS earlier and were involved in helping Linkin Park with their live shows. Stagelight was showcased by Mike Shinoda (see video below), using LP's song Burn It Down from their album Living Things as an example. I still keep Stagelight Linkin Park Edition installed on my system and use it now and then.

And here is a track I created using this DAW when I was in college. Mike Shinoda even retweeted it 🤗

Sound on Sound magazine had covered Stagelight in one of their articles. The DAW was later acquired by Roland and is now called Zenbeats.

Other notable collaborations:

Waves Audio x Andrew Scheps: If you're into audio tech, you'll definitely know these names. Waves collaborated with Andrew on plugins such as Scheps Omni Channel 2, bringing ideas from a creative professional into something that music producers around the world have benefited from.

Andrew is giving a keynote at the free ADCx Gather event on 1st November 2024 on the topic "Interfaces are King! A Practical Look at AI Audio Tools and What Professionals Actually Need".

Serum by Xfer Records, while not created in collaboration with any artist, was created by an artist: the DJ and producer Steve Duda. Every electronic music producer in the world has used this plugin at some point in their work.

There are many more examples of such collaborations, and it's important to learn from them and get inspired. Share your favorite ones in the comments below.

What's happening now?

Well, to begin with, here are 2 items as food for thought:

- When computers were first introduced, there was scepticism for sure. But things would have been very different if they had been called “robots” instead of "computers" in their advertisements. It would have caused much more chaos than it did. The "intelligence" keyword in the technology of today (the one that's termed AI) is causing unnecessary trouble. Most people don't know how it works, and never get to see that it's all just maths.

- Every generation has a poor affinity for learning curves. Some of it comes from inertia, from not feeling the need to learn, while the rest comes from the talk in the market. And it looks like many in today's younger generation have become uncomfortable for both of these reasons.

"Don't we have the most tech-savvy population of creatives ever?", one might ask. So why is it that AI tools are not seeing as much adoption? Here are possible reasons:

- There's a massive gap between key cohorts of people: the ones who are doing research, the ones who are making products, the ones who are funding projects, the ones who are using these tools to create art, and the consumers.

- (Remember the thought I asked you to hold on to?) The focus on UI is missing. It’s like electronics engineers spending all their time improving circuit boards, and no one is making synth boxes! People working on solving specific aspects of AI are like electronics engineers trying to make a better capacitor, etc. Jupyter notebooks are like the labs where cool stuff happens but it either never comes out of the lab, or when it does, it comes as a "make a wish" genie that no one knows how to control. Text to X in creative tools, imo, is a "make a wish" genie-workflow. It's pathetic! Eventually all this reaches end users NOT as products, but as news, packaged as conspiracy theories. 🤦♂️

- Disconnect between artists and developers: How would a developer know which parameters can be provided to artists? At least after the first iteration of the product, the voice of creatives has to be heard. As of now, it’s completely out of the picture. We need more Hans Zimmers of the world reaching out to more u-he's of the world. Please! 📢 Come to ADC this November. I'm keen on connecting with people who want to bring about a change. (Or reach out using the Contact Me form. I'm all ears.)

- Unlike synths and electronics, AI models are more difficult to “tame”. They tend to create artefacts that are often unexpected and could be perceived as noise. But if you think about it, electronic synths can sometimes behave that way too. Compared to the sounds a guitar or any physical instrument makes, which are a direct result of human action & physics, synths can give results that don't seem normal. For example, push the feedback in a delay module too far and you start to hear “infinite” loops. This can never happen in a physical guitar or violin, ever! But artists over the years found ways to creatively use these anomalies in synths. Unison is another parameter that got its love over time.

- AI audio product creators are NOT musicians / performers / artists themselves. It's unfair to expect the world's best developers to also be the world's best musicians, and that's not what I'm saying either. But there needs to be at least one person at the C-level of the company who either understands music or has enough experience performing. It's not enough to be a "music lover" or a "song critic".

- The gaming industry often laughs at VR projects, primarily because it has been creating immersive experiences since the inception of 3D games. Generative music has also always been a crucial aspect of interactive music experiences in games, long before the AI hype got people excited about analysing input material (datasets) as symbols and creating something out of it. It's a shame that while game audio folks do so many collaborations with technical teams, they rarely engage with audio developers who make products.
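The delay feedback anomaly mentioned in the list above is easy to see in a few lines of Python. This is a minimal sketch, not any specific product's delay module: `feedback_delay` and its parameter names are hypothetical, and the delay is reduced to a bare feedback loop with no filtering or mixing controls.

```python
def feedback_delay(dry, delay_samples, feedback):
    """Add to each input sample a delayed copy of the output, scaled by feedback."""
    buffer = [0.0] * delay_samples  # circular buffer holding past output samples
    out = []
    for i, x in enumerate(dry):
        idx = i % delay_samples
        y = x + feedback * buffer[idx]  # the echo is fed back into itself
        buffer[idx] = y
        out.append(y)
    return out


impulse = [1.0] + [0.0] * 39  # a single click followed by silence

decaying = feedback_delay(impulse, delay_samples=4, feedback=0.5)   # echoes fade out
endless = feedback_delay(impulse, delay_samples=4, feedback=1.0)    # echoes never fade
runaway = feedback_delay(impulse, delay_samples=4, feedback=1.25)   # echoes keep growing
```

With feedback below 1 each echo is quieter than the last, which is how a sound behaves in a room. At 1 or above the loop never dies, something no string can do on its own, and yet artists learned to play with exactly that edge.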

In my quick call with the Neutone CTO, Andrew Fyfe, we discussed how artists can use AI tools to their advantage, and he shared a live performance video of him using Neutone's Morpho audio plugin. He mentioned how their workflow includes ideating > creating > performing > iterating. I've rarely found the 'performing' part in any other AI-first audio company. Check out Andrew's performance (it's so cool!):

On the same note 🎵 the collaboration between Jacob Collier and Google’s MusicFX DJ is worth noting:

What next?

With creative AI tools, the sonic output is even wilder. Most people expect them to produce replicas of synths or hardware instruments, but they are missing the point, as Andrew aptly mentioned in our chat. The technology can do much more. Give it a try.

The interaction between artists and AI tools has to happen. Companies making them should work with creatives, or have performers on their team. I absolutely love Neutone and similar startups for this reason. Being artists themselves, they build, perform and iterate.

Another analogy I like to mention is about pencils, a technology of their own. There are some who make them and there are those who use them creatively. But then there are also those who do research on the materials and shapes (you can even choose to think of guitars). At the end of the day, if we want the industry to grow, all these people must start talking to each other! We now have enough breadboard circuit experts. We need more of those who understand the best of 3 worlds: creative, tech & product. Business & marketing will follow if a few great examples are set, and I'm glad Jacob is doing his part so well.

Written by Prashant Mishra

Audio Developer Conference (ADC) | Universal Category System (UCS) | Game Audio India | National Institute of Design | Music Hack Day India | Music Tech Community | Previously collaborated with & contributed to School of Video Game Audio | Disney Publishing Worldwide (DPW) | ISMIR | Osmo | Airwiggles | epic! and more